The Insurtech world is going through one of its most contradictory moments yet. Funding has dropped sharply (Gallagher Re, Global InsurTech Report), several high-profile vendors have downsized or shut their doors, and regulators are tightening their grip on AI. Yet the marketing claims have never been louder. Every week, someone promises “full automation,” “instant compliance,” or “no adjusters needed.”

It makes for great headlines, but it doesn’t match how insurance actually works.

Insurance is a regulated industry where judgment, accountability, and fairness matter as much as speed. When a vendor claims their AI can replace human oversight, interpret multi-state regulations automatically, or make binding claims decisions without human review, they aren’t selling innovation. They’re selling risk under the disguise of efficiency.

The industry doesn’t need bigger promises. It needs safer technology. It needs AI that supports underwriters, adjusters, and compliance teams, rather than AI that pretends to replace them. And it needs vendors that can survive long enough to support the very operations they want to automate.

This article offers a clearer view of what’s really happening: where Insurtech hype is drifting into dangerous territory, what responsible AI looks like, what insurers can safely automate, and how to evaluate vendors in a market where stability matters as much as capability.

The Problem: Insurtech Promises Are Getting Louder as Reality Gets Tougher

As market conditions tighten, the gap between what some Insurtech vendors promise and what insurers actually need is widening. Funding has cooled, burn rates remain high, and many startups are under pressure to show rapid adoption. That pressure often translates into bold claims that sound transformative but collapse under basic scrutiny.

Some vendors claim their AI can replace adjusters or underwriters entirely. Others suggest they can interpret regulatory requirements across multiple jurisdictions without legal review. A few even imply that compliance can be automated end-to-end with no human oversight. These claims may attract attention, but they create operational and regulatory exposure for any insurer that takes them literally.

Real-world cracks are already showing

We’re already seeing the consequences of overpromised automation. AI-driven decision systems in health insurance have faced increasing regulatory scrutiny for fairness, accuracy, and transparency (NORC at the University of Chicago, “Artificial Intelligence in Health Insurance”). Automated claims systems have misinterpreted policy wording. Several document-processing tools have misread regulatory requirements, leading to filing inconsistencies and remediation (Wiley Rein Insurance Regulatory Brief).

The core issue is misalignment. Insurance is built on judgment, fairness, and accountability. When vendors oversell automation, they encourage insurers to hand core decisions to models that were never designed to carry that level of responsibility.

The industry doesn’t need louder promises. It needs technology that operates within regulatory boundaries, supports human expertise, and produces outcomes underwriters and claims leaders can defend.

Human in the Loop, Not Human Out of the Loop

Insurers don’t reject automation. They reject automation that can’t be explained, audited, or defended. The market isn’t pushing back against AI. It’s pushing back against AI that seeks to replace judgment rather than support it.

Responsible AI in insurance starts with a simple principle: models can recommend, but humans must decide. This is more than a design preference; it is a regulatory expectation. U.S. and international regulators now expect insurers to maintain transparency, documentation, governance, and defined human oversight for AI systems (NAIC Model Bulletin on AI Systems).

AI can handle the work that slows people down, such as extracting data from loss runs, validating SOV totals, routing submissions, flagging anomalies, and applying rules consistently. These tasks benefit from automation because they are repeatable, high-volume, and prone to error when done manually. Automating them reduces friction without compromising oversight.
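To make "validating SOV totals" concrete, here is a minimal sketch of the kind of check involved. It assumes each extracted SOV row is a dictionary with a hypothetical `tiv` (total insured value) field and that the document states an overall total; the field name, function name, and tolerance are illustrative assumptions, not any vendor's actual schema.

```python
from decimal import Decimal

def validate_sov_total(rows, stated_total, tolerance=Decimal("0.01")):
    """Return a list of human-readable flags; an empty list means nothing was flagged.

    `rows` and the "tiv" key are hypothetical names chosen for this sketch.
    """
    flags = []
    computed = Decimal("0")
    for i, row in enumerate(rows, start=1):
        value = row.get("tiv")
        if value is None:
            # A missing value is flagged for a person to resolve, never guessed.
            flags.append(f"row {i}: missing insured value")
            continue
        computed += Decimal(str(value))
    if abs(computed - Decimal(str(stated_total))) > tolerance:
        flags.append(f"total mismatch: computed {computed}, stated {stated_total}")
    return flags
```

The function only reports discrepancies. It does not correct the file or make a decision, which keeps the step repeatable and easy to review.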

AI supports judgment, but it cannot replace it

Where automation becomes risky is in the areas where context carries more weight than data. Coverage interpretation, exception handling, liability assessment, and regulatory compliance all depend on reasoning that cannot be reduced to pattern recognition. No model today can interpret new state regulations the day they go live, justify a borderline decision to a regulator, or negotiate a complex claim with a broker.

This is why rules engines still matter. They encode the insurer’s policies, workflows, and compliance expectations. AI can help power those workflows (by surfacing information, scoring files, or spotting inconsistencies), but the rules are set by people, and the final decisions are made by people.
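One way to picture that division of labor is the sketch below: rules are plain data owned by the insurer, a model score is just one input, and the output is a recommendation that stays undecided until a named person signs off. Every name here (`Rule`, `RULES`, `recommend`, `decide`, the thresholds, the `model_risk_score` field) is a hypothetical chosen for illustration, not a description of any particular product.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[dict], bool]  # predicate written and owned by the insurer

@dataclass
class Recommendation:
    action: str                            # "refer" or "proceed": a suggestion only
    reasons: list = field(default_factory=list)
    decided_by: Optional[str] = None       # stays None until a person signs off
    final_decision: Optional[str] = None

# Hypothetical rules; the thresholds are illustrative assumptions.
RULES = [
    Rule("tiv_over_limit", lambda s: s["tiv"] > 50_000_000),
    Rule("high_model_risk", lambda s: s["model_risk_score"] >= 0.8),
]

def recommend(submission):
    """Apply the insurer's rules and return a recommendation, not a decision."""
    reasons = [rule.name for rule in RULES if rule.applies(submission)]
    return Recommendation(action="refer" if reasons else "proceed", reasons=reasons)

def decide(rec, reviewer, decision):
    """Record the final decision; a named human reviewer is mandatory."""
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    rec.decided_by = reviewer
    rec.final_decision = decision
    return rec
```

In this arrangement the model can change what gets surfaced, but it cannot change the rules or close a file on its own.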

Transparent, governed, human-in-the-loop AI is the only approach that survives audits, protects policyholders, and keeps insurers in control of their operations. And with the EU AI Act establishing explicit requirements for transparency, human oversight, and traceability, responsible design is no longer optional.

What Can Actually Be Automated Safely (And What Cannot)

Insurers don’t need promises of “end-to-end automation.” They need clarity about which tasks can be automated without creating compliance risk, governance gaps, or reputational exposure. AI is incredibly effective in the parts of underwriting and claims where the work is structured, repeatable, and high-volume. It’s far less reliable in areas that require interpretation, reasoning, or legal judgment.

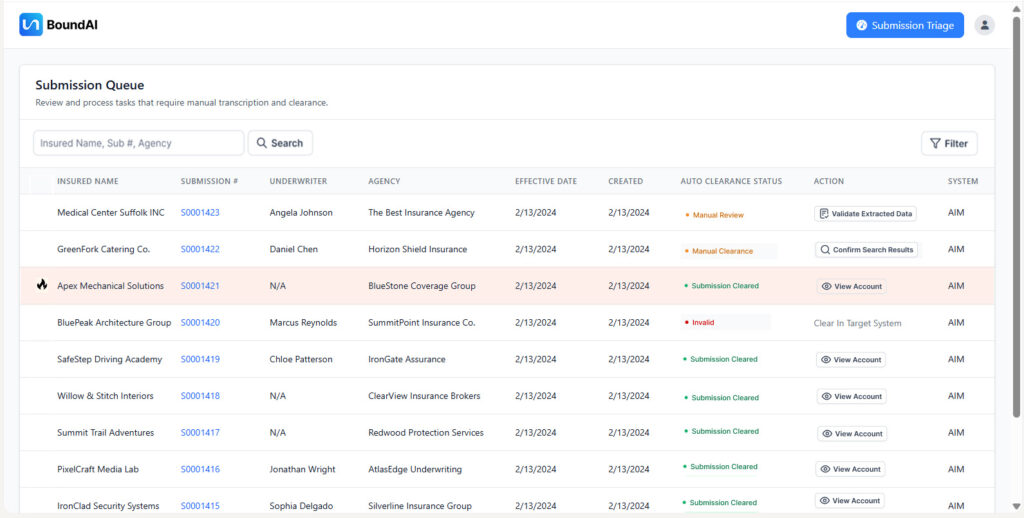

The safest automation opportunities are the ones that remove friction without touching decisions. Submission intake is the clearest example. Underwriters still spend hours downloading PDFs, renaming files, rekeying SOVs, and validating loss runs. AI solves this immediately. Tools like Bound AI can extract values from documents with high accuracy, standardize formats, validate totals, and deliver clean data into the workflow within minutes.

Where automation is safe, reliable, and high-impact

These are the tasks that can be automated today with confidence because they are rules-driven and auditable:

- Submission intake and email/document handling

- Data extraction from loss runs, SOVs, and applications

- File hygiene and document standardization

- Intake validation (missing fields, mismatched values, outdated forms)

- Appetite routing and first-pass triage

- Fraud signal detection and anomaly flagging

- On-exposure enrichment (addresses, classifications, geospatial data)

- Pre-underwriting preparation for humans

These steps don’t require judgment. They require accuracy and consistency – two things AI delivers exceptionally well.
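As an illustration of intake validation and first-pass triage from the list above, the sketch below checks for missing fields and routes a submission to a queue. The field names, class codes, the placeholder state code `"XX"`, and the queue names are all hypothetical assumptions; a real appetite guide would come from the insurer's own rules.

```python
# Hypothetical appetite settings, for illustration only.
IN_APPETITE_CLASSES = {"office", "retail", "warehouse"}
EXCLUDED_STATES = {"XX"}  # placeholder code, not a real jurisdiction
REQUIRED_FIELDS = ("insured_name", "class_code", "state", "effective_date")

def triage(submission):
    """Return (queue, reasons). Every outcome lands in a queue that a person works."""
    missing = [f for f in REQUIRED_FIELDS if not submission.get(f)]
    if missing:
        return ("incomplete", missing)
    if submission["state"] in EXCLUDED_STATES:
        return ("out_of_appetite_review", ["state"])
    if submission["class_code"] not in IN_APPETITE_CLASSES:
        return ("out_of_appetite_review", ["class_code"])
    return ("underwriter_queue", [])
```

Note that the function never declines anything. Out-of-appetite submissions go to a review queue, so the routing stays a preparation step rather than a decision.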

Automation helps with structure

Where automation becomes risky is in the workflows where context matters more than patterns. AI can support underwriting and claims, but it cannot replace the reasoning behind a coverage decision, an exception approval, or a regulatory interpretation. No model should independently evaluate complex liability, issue binding claims decisions, or interpret multi-jurisdictional regulations without human review.

The same applies to claims settlement. AI can spot inconsistencies or highlight potential fraud, but it cannot negotiate outcomes or make determinations that require fairness and empathy. And while AI can speed up compliance steps, like ensuring required documents are present, it cannot replace the interpretation of state-specific filings or nuanced regulatory updates.

Bound AI was built around a simple principle: automate the work that should be automated, support the work that requires judgment, and never allow the model to act as the decision-maker. AI handles the structure; humans handle the judgment. When both are used where they belong, insurers gain speed, consistency, and operational clarity without compromising control, compliance, or trust.

The Risk Everyone Ignores: Insurtech Financial Stability

Technology risk in insurance isn’t just about models, accuracy, or automation. It’s about survivability. A surprising number of Insurtechs operate with short runways, high burn rates, and no clear path to profitability. Yet these same companies ask carriers and MGAs to outsource critical processes, sometimes even core operational workflows, to platforms that may not exist in two years.

This is the part of the conversation the industry avoids. But it’s the most important. If a vendor collapses, insurers inherit the operational debt: dead integrations, unsupported workflows, compliance gaps, unfinished implementations, and a scramble to rebuild processes that were never meant to return in-house.

Funding data makes the trend impossible to ignore. Insurtech investment has dropped sharply since its 2021 peak, and several high-profile vendors have already downsized, merged under financial pressure, or shut down entirely. Despite these realities, some continue to promise sweeping automation: promises they cannot afford to build responsibly, govern correctly, or support over the long term.

The best AI is worthless if the company behind it can’t survive

Insurers depend on long-term stability. Claims systems, underwriting platforms, and compliance workflows are infrastructural tools. When a vendor offering core automation is financially unstable, the insurer inherits the risk of vendor failure, operational disruption, and regulatory exposure. An insurer’s technology strategy is only as strong as the financial health of the partners behind it.

Responsible innovation requires more than smart algorithms. It requires a business capable of supporting those algorithms five years from now. Bound AI was built with that principle in mind. Sustainable technology means proven operations, transparent governance, and long-term viability, rather than flashy demos or aggressive promises.

Insurers need AI from companies built to last.

The New Vendor Checklist: Six Questions Every Insurer Should Ask Before Buying AI

Insurers evaluate risks for a living, yet the same level of scrutiny rarely gets applied to Insurtech vendors. That has to change. The difference between a sustainable AI partnership and a future operational crisis often comes down to the questions asked before a contract is signed. A vendor that cannot answer these six questions clearly and transparently should be removed from consideration immediately.

1. Explainability – Can they show how every decision is made?

If a vendor can’t walk you through how their model arrives at its recommendations, you’re not buying responsible AI. You’re buying a black box. Every output must be reconstructable, reviewable, and defensible.

2. Human-in-the-loop – Where exactly does human oversight occur?

Ask them to show the points where humans intervene, override, or validate model outputs. If the vendor positions human oversight as optional or “only needed for edge cases,” walk away.

3. Rules engine – Do you control the rules, or do they?

Insurers must control their own workflows, decision criteria, and compliance logic. The model can support the rules, but it cannot define them.

4. Auditability – Can they produce clean, traceable audit trails?

Every input, every flag, every override, and every decision must be logged. Without this, you cannot withstand regulatory review or partner audits.

5. Operational track record – Have they actually run insurance operations?

AI alone is not enough. Vendors need real experience in underwriting, claims workflows, E&S processes, and specialty operations to automate them.

6. Financial stability – Will they still be here in five years?

Ask about runway, burn rate, and long-term viability. A collapsing vendor creates more risk than any flawed model ever will.

These six questions expose the difference between vendors selling responsible technology and those selling a pitch. Procurement is no longer about features. It’s about governance, continuity, and trust.
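For the auditability question in particular, it can help to know what a traceable trail might look like in practice. The sketch below is one common pattern, assumed here for illustration rather than taken from any vendor: an append-only log in which each entry stores the hash of the previous one, so later edits become detectable. The class and field names are hypothetical, and a production log would also record timestamps and sit on durable, access-controlled storage.

```python
import hashlib
import json

def _digest(body):
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log of inputs, flags, overrides, and decisions."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries = []

    def append(self, actor, event, payload):
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {"actor": actor, "event": event, "payload": payload, "prev_hash": prev_hash}
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry has been altered, reordered, or removed mid-chain."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

A reasonable request during vendor evaluation is to see the real equivalent of `verify()`: some way to demonstrate that the recorded flags and overrides have not been changed after the fact.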

The Bottom Line

Insurance doesn’t need bigger claims from vendors. It needs technology that can withstand audits, support real operations, and survive market volatility. The future belongs to Insurtechs that build responsibly: those that combine automation with oversight, speed with transparency, and AI with human judgment.

The path forward is clear. Automate the work that is structured, repetitive, and high-volume. Keep authority, interpretation, and accountability in human hands. Work with vendors who are transparent, financially stable, and operationally proven.

Insurers that follow this path will get what they were promised all along: faster workflows, cleaner data, stronger compliance, and technology that actually lasts. In a market full of hype, responsible AI is the only real competitive choice.