Straight-through processing is often held up as the gold standard of insurance automation. Higher STP rates are equated with maturity, efficiency, and operational excellence. For many insurance leaders, the logic feels obvious: the more work that flows through the system without human touch, the better the outcome.

But in practice, this thinking has created a distorted view of what success actually looks like.

When it’s treated as an end goal, especially in specialty and commercial lines, straight-through processing pushes organizations to automate decisions they shouldn’t. The result isn’t cleaner operations or better underwriting, but hidden risk, forced exceptions, and manual work resurfacing later in the process.

In lines of business where risk is nuanced, submissions are document-heavy, and judgment matters, chasing full STP can do more harm than good.

This article challenges the assumption that more automation always means better outcomes. It explains where straight-through processing actually works in insurance, where it breaks down, and why partial automation with human checkpoints consistently outperforms “full STP” fantasies in real-world insurance operations.

Contact the Bound AI team to learn how we can help your business.

Where Straight-Through Processing Actually Works

Straight-through processing delivers real value when it is applied in the right context, to the right types of work. The problem is that many insurers assume what works in one line of business should work everywhere.

STP performs best when three conditions are present: the risk is standardized, the data is structured, and the decision logic is stable. In these environments, automation removes unnecessary handling.

Personal lines are the clearest example. Submissions follow consistent formats. Rating inputs are predictable. When data arrives clean and rules rarely change, full STP improves speed, lowers cost, and produces reliable outcomes.

The same is often true for simple SME products or narrowly defined endorsements. When eligibility criteria are clear and coverage options are fixed, automation can safely carry a submission from intake to bind with minimal human involvement.

The key point is variability. STP succeeds where variability is low. The moment risk profiles become nuanced, documents become inconsistent, or exceptions become the norm rather than the edge case, the assumptions that make full STP viable start to collapse.
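As a rough illustration, the three conditions described above can be written as a simple gate in front of any fully automated path. This is a minimal sketch, not a production rule set: the `Submission` fields and the `stp_eligible` function are hypothetical names introduced here, and a real intake system would have to derive each flag from its own data.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    # All three fields are hypothetical flags for illustration only.
    standardized_risk: bool  # risk fits a predefined, standardized product class
    structured_data: bool    # inputs arrived as clean, structured fields
    rules_stable: bool       # decision logic for this product rarely changes


def stp_eligible(sub: Submission) -> bool:
    """Return True only when all three low-variability conditions hold."""
    return sub.standardized_risk and sub.structured_data and sub.rules_stable


# Example: a standardized personal lines risk versus a document-heavy specialty risk.
personal_lines = Submission(standardized_risk=True, structured_data=True, rules_stable=True)
specialty = Submission(standardized_risk=False, structured_data=False, rules_stable=True)

print(stp_eligible(personal_lines))  # True
print(stp_eligible(specialty))       # False
```

The gate is deliberately strict: failing any one condition sends the file off the fully automated path, which mirrors the point that full STP depends on low variability across all three.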

Where STP Breaks Down in Specialty and Commercial Lines

Straight-through processing starts to unravel the moment it’s applied to work that depends on judgment rather than rules. Specialty and commercial insurance are built on variability, and that variability is exactly what full STP struggles to handle.

Specialty submissions rarely arrive in clean, standardized formats. They come with layered documentation, broker-specific templates, and supporting materials that don’t always align. Loss runs may contradict applications. Schedules of values may change between versions.

More importantly, appetite in specialty lines isn’t binary. Decisions depend on context: prior loss behavior, exposure nuances, portfolio balance, and carrier-specific tolerances. These factors don’t map cleanly to rigid rules without stripping out the very judgment that makes specialty underwriting effective.

When full STP is forced onto this kind of work, the process doesn’t become cleaner. It becomes brittle. Submissions are auto-declined because one field doesn’t fit a predefined rule. Quotes are generated that require correction later. Exceptions pile up without clear ownership.

Human work doesn’t disappear. It simply moves downstream, where it’s harder to track, audit, and control. Underwriters end up making decisions after the fact instead of shaping them upfront. Compliance teams inherit risks that automation cannot manage.

High Automation Rates, Low Operational Truth

Many organizations proudly report rising STP rates as proof that automation is working. Dashboards show fewer touches, faster throughput, and more “straight-through” files. On paper, the operation looks efficient. On the ground, it often tells a different story.

High STP rates can mask the extent of manual effort that remains. Auto-processed submissions frequently require follow-up corrections, clarification emails, or post-bind cleanup. Overrides happen quietly. Manual checks happen outside the system. What appears straight-through is often only straight-through until someone fixes it later.

This creates a dangerous gap between reported efficiency and actual control. Leaders see progress. Operators see risk. Decisions are being pushed forward without enough context, and the work required to correct them is no longer visible in the metrics that matter most.

STP becomes a reporting achievement rather than an operational one. The focus shifts to maximizing automation percentages instead of improving decision quality, data accuracy, or compliance integrity. Over time, this disconnect erodes trust, both internally with underwriting teams and externally with carriers and regulators.

In specialty and commercial environments, the illusion of full STP is often more costly than admitting where human judgment is still needed.

Why Partial Automation + Human Checkpoints Outperforms Full STP

The most effective insurance operations don’t try to eliminate people from the workflow. They design automation around where people add the most value. That’s the difference between chasing full STP and building a system that actually scales.

Partial automation focuses on removing friction. It targets the work that slows teams down without improving decisions: document intake, data extraction, validation, routing, and prioritization. These steps are repetitive, rules-driven, and error-prone when handled manually. Automating them improves speed and consistency without introducing risk.

Human checkpoints remain where context matters. Underwriters review structured, validated data instead of raw documents. They handle exceptions, interpret coverage nuances, and make final decisions that require experience and accountability. Compliance teams see clearer audit trails because decisions are intentional.

This hybrid model delivers what full STP promises but rarely achieves: faster turnaround with better control. Files move quickly because the noise is removed early. Decisions improve because humans engage at the right moments. And risk stays visible instead of being pushed into hidden overrides.
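One way to picture the hybrid model is a pipeline in which the automated steps validate and route a file, but never decide it. The sketch below is illustrative only: the field names, queue names, and the single cross-check between application and loss runs are assumptions made for this example, not a description of any particular platform.

```python
from dataclasses import dataclass, field


@dataclass
class Submission:
    # Hypothetical fields standing in for data extracted from submission documents.
    submission_id: str
    application_losses: int  # loss count stated on the application
    loss_run_losses: int     # loss count found in the loss runs
    missing_fields: list[str] = field(default_factory=list)


@dataclass
class RoutedFile:
    submission_id: str
    queue: str               # hypothetical queue name for the human checkpoint
    flags: list[str]         # reasons an underwriter should look more closely


def validate(sub: Submission) -> list[str]:
    """Automated step: collect discrepancies instead of acting on them."""
    flags = []
    if sub.application_losses != sub.loss_run_losses:
        flags.append("loss runs contradict application")
    for name in sub.missing_fields:
        flags.append(f"missing field: {name}")
    return flags


def route(sub: Submission) -> RoutedFile:
    """Automated step: every file reaches a human; flags only choose the queue."""
    flags = validate(sub)
    queue = "underwriter_exception" if flags else "underwriter_review"
    return RoutedFile(sub.submission_id, queue, flags)


clean = route(Submission("S-1", application_losses=2, loss_run_losses=2))
messy = route(Submission("S-2", application_losses=0, loss_run_losses=3,
                         missing_fields=["sprinkler_type"]))
print(clean.queue, clean.flags)
print(messy.queue, messy.flags)
```

Note what the sketch leaves out: there is no auto-decline branch. A field that fails validation produces a visible flag with a named reason, so the exception is owned by a person upfront rather than surfacing as a quiet override later.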

In specialty and commercial lines, performance comes from balance. Automate the work machines do best. Preserve human judgment where it matters most.

How to Redefine STP Success

If straight-through processing is measured only by how few times a human touches a file, it encourages the wrong behavior. It rewards automation for its own sake instead of the quality or durability of the outcome. In specialty and commercial insurance, that mindset creates blind spots.

STP success should be measured by decision quality and operational stability. Faster isn’t better if it leads to rework. Touchless isn’t progress if exceptions are handled later, off-system, or without clear accountability.

A healthier way to evaluate STP focuses on outcomes that matter in real operations. How quickly can a clean, accurate quote be delivered? How often do files require correction after automation? Are exceptions clearly identified and intentionally handled? Do audit results improve as automation expands, or do findings increase?
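Some of these questions can be turned into numbers that sit next to the headline STP rate. The following is a minimal sketch under stated assumptions: `FileRecord` and its two flags are hypothetical, and the metric names (`reported_stp_rate`, `effective_stp_rate`, `post_automation_correction_rate`) are labels chosen for this example rather than industry-standard definitions.

```python
from dataclasses import dataclass


@dataclass
class FileRecord:
    # Hypothetical per-file flags; a real system would source these from audit logs.
    touched_before_bind: bool  # a human handled the file before bind
    corrected_after: bool      # the file needed correction or cleanup afterwards


def stp_metrics(files: list[FileRecord]) -> dict[str, float]:
    """Compare the headline STP rate with the share of files that stayed clean."""
    total = len(files)
    if total == 0:
        raise ValueError("no files to measure")
    auto = [f for f in files if not f.touched_before_bind]
    clean_auto = [f for f in auto if not f.corrected_after]
    corrected = len(auto) - len(clean_auto)
    return {
        # Share of files with no human touch before bind (the dashboard number).
        "reported_stp_rate": len(auto) / total,
        # Share of files that were touchless and never needed fixing.
        "effective_stp_rate": len(clean_auto) / total,
        # Share of auto-processed files that were corrected later.
        "post_automation_correction_rate": corrected / len(auto) if auto else 0.0,
    }


# Example: 10 files, 8 auto-processed, 3 of those corrected after the fact.
files = (
    [FileRecord(False, False)] * 5
    + [FileRecord(False, True)] * 3
    + [FileRecord(True, False)] * 2
)
print(stp_metrics(files))
```

In this made-up example the dashboard would report an 80% STP rate, while only half of all files were both touchless and correct. Tracking that gap over time is one way to see whether automation is improving outcomes or only improving the report.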

When these metrics move in the right direction, automation is working. When they don’t, higher STP percentages are just noise.

Redefining STP success means shifting the goal from “fewer touches” to “better decisions, sooner, with control.”

How Bound AI Enables Practical STP Without the Fantasy

Bound AI was built with a clear view of where straight-through processing actually adds value and where it becomes dangerous. Instead of chasing full STP across every decision, Bound AI focuses on making the right parts of the workflow straight-through.

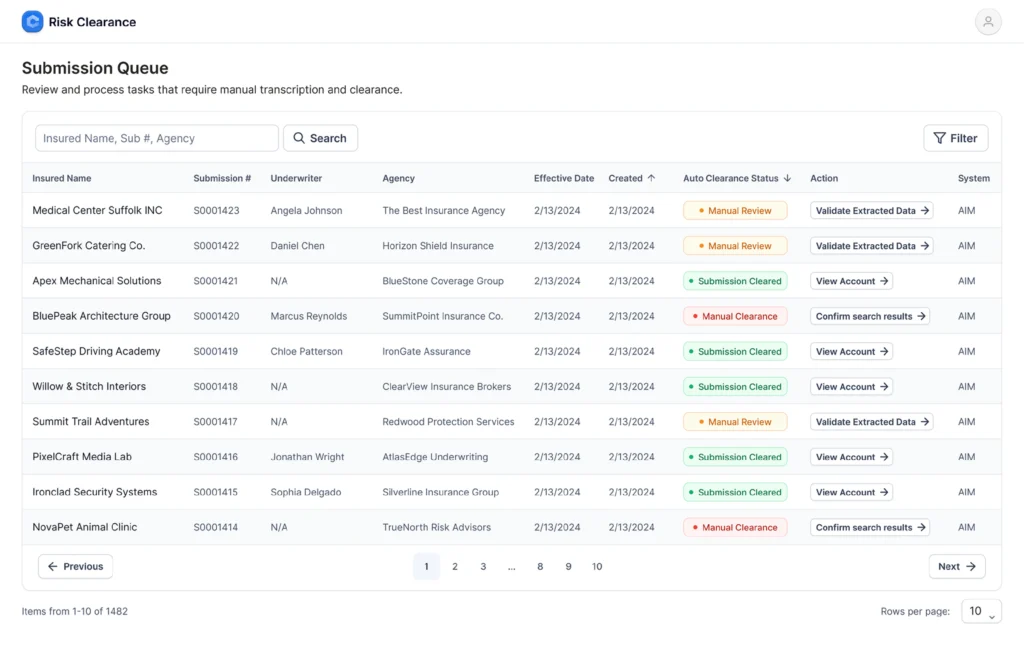

The starting point is intake. Specialty and commercial submissions are document-heavy and inconsistent, which is where most STP initiatives fail. Bound AI automates document ingestion, data extraction, validation, and cross-checking across loss runs, SOVs, applications, and supporting files.

What moves straight through is the work that should: clean data, validated inputs, structured files, clear routing, and prioritization. What doesn’t move straight through is judgment. Underwriters receive accurate, traceable information early, so decisions are faster and more consistent without being automated away.

This creates intentional checkpoints. Exceptions surface early. Edge cases are visible. Decisions are explainable. Human involvement isn’t a failure of automation, but a design choice.

Bound AI enables STP where it makes sense and preserves human control where it matters. The result is speed without opacity, automation without hidden risk, and workflows that scale without breaking under real-world complexity.

The Bottom Line

Straight-through processing is not a measure of maturity. In specialty and commercial insurance, it’s often a warning sign when applied without restraint.

The strongest operations don’t aim for zero-touch workflows. They aim for low-friction, high-control workflows. They automate the work that benefits from consistency and scale, and they protect the decisions that require judgment, context, and accountability.

Insurance leaders who stop chasing automation percentages and start designing balanced workflows will move faster, make better decisions, and carry less risk. The goal isn’t to remove humans from the process. It’s to make every human decision better by removing everything that shouldn’t require one.
