The insurance industry is investing heavily in artificial intelligence, drawn by more advanced models, greater predictive power, and the promise of smarter underwriting decisions. But behind the excitement, there’s a problem most teams avoid talking about.

AI in underwriting fails because the data feeding the models is broken.

Loss runs don’t match the application. Schedules of values conflict across documents. Key fields are missing, outdated, or manually rekeyed. Yet insurers keep layering more sophisticated models on top of this foundation, expecting better outcomes. What they get instead are confident predictions built on inaccurate inputs.

Underwriting data accuracy matters more than AI sophistication. A complex model trained on messy data will consistently underperform a simpler model powered by clean, consistent inputs.

If insurers want AI to improve underwriting decisions, they have to start where underwriting actually begins: with accurate, structured data.

Contact the Bound AI team to learn how we can help your business.

The Dirty Reality of Underwriting Data

Underwriting data rarely starts its life in clean, structured systems. It starts in emails, PDFs, spreadsheets, and broker templates that were never designed to feed AI models. By the time that information reaches an underwriting platform, it has often been touched, transformed, and reinterpreted multiple times.

Why Underwriting Data Is Inherently Messy

Every submission brings variation. Brokers use different formats. Applications change by carrier, line, and state. Loss runs arrive as scanned PDFs, exports, or handwritten notes. Schedules of values may be split across multiple files or embedded inside broader documents.

Underwriters and assistants have to bridge these gaps manually. They extract values, reconcile differences, and make judgment calls just to move the file forward. Even when the work is done carefully, small inconsistencies slip through because the workflow is fragmented by design.

Where Accuracy Breaks Down First

The first cracks usually appear at intake. Data is pulled from unstructured documents where context matters and formatting varies. Totals don’t align across documents. Fields are missing or populated differently depending on the source. Versions change, but older values remain in circulation.

Manual fixes compound the issue. A value corrected in one system may not be corrected everywhere else. Over time, the file stops representing a single source of truth. It becomes a collection of assumptions held together by experience rather than verified data.

These issues are the baseline reality for most underwriting teams. And when inaccurate data becomes the foundation for analytics or AI-driven decision support, every downstream output inherits the same uncertainty.

How Inaccurate Data Quietly Destroys AI Outcomes

AI systems don’t question the quality of the data they receive. They assume it’s correct. Once inaccurate information enters the workflow, the model doesn’t slow down or raise its hand. It moves forward with confidence, producing outputs that look precise but are fundamentally flawed.

Bad Inputs, Confidently Wrong Outputs

When loss data is incomplete, exposure values are inconsistent, or key fields are missing, AI doesn’t compensate for uncertainty. It optimizes around it. A small error in a schedule of values can cascade into incorrect risk scores. A mismatched loss run can distort pricing recommendations. A missing data point can push a submission into the wrong appetite category.
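To make the cascade concrete, here is a minimal sketch. It assumes a hypothetical rating rule in which premium is the total insured value (TIV) multiplied by a flat rate per $100. The rate, the building values, and the function name `indicated_premium` are illustrative assumptions, not a real rating plan.

```python
from decimal import Decimal

# Hypothetical flat rate per $100 of insured value (illustrative only).
RATE_PER_100 = Decimal("0.25")


def indicated_premium(building_values):
    """Sum the schedule of values and apply the flat rate per $100 of TIV."""
    tiv = sum(building_values, Decimal("0"))
    return tiv / Decimal("100") * RATE_PER_100


# Schedule as submitted (hypothetical values).
correct_sov = [Decimal("4200000"), Decimal("1850000"), Decimal("950000")]

# Same schedule after one value is rekeyed with a dropped digit.
rekeyed_sov = [Decimal("4200000"), Decimal("185000"), Decimal("950000")]

correct = indicated_premium(correct_sov)
rekeyed = indicated_premium(rekeyed_sov)
shortfall = correct - rekeyed

print(f"correct premium: {correct:,.2f}")
print(f"rekeyed premium: {rekeyed:,.2f}")
print(f"shortfall:       {shortfall:,.2f}")
```

With these assumed numbers, a single dropped digit lowers the indicated premium from 17,500 to 13,337.50. Nothing in the calculation signals that anything went wrong, which is exactly why the result looks reasonable.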

The danger is that these outcomes rarely look broken. They look reasonable. That’s what makes them risky. Inaccurate data doesn’t cause obvious system failures. It produces decisions that appear valid but are quietly misaligned with reality.

Where the Damage Shows Up

Over time, the effects compound. Risks are mispriced. Appetite boundaries blur. Declination logic becomes inconsistent. False positives and false negatives increase because the inputs feeding the model are unreliable.

Perhaps most damaging is what happens to feedback loops. When underwriting outcomes, claims results, and portfolio performance are analyzed later, the insights drawn from that data are skewed. Teams end up tuning models based on distorted signals, reinforcing the very issues they’re trying to fix.

AI doesn’t fail loudly when data accuracy is poor. It fails quietly, at scale, and with growing confidence.

Why Insurers Overvalue Model Sophistication

There’s a reason insurers keep reaching for more advanced models when results don’t improve. Sophistication feels like progress. New algorithms, richer features, and more complex architectures are easy to point to as evidence of innovation. Data accuracy, by contrast, looks operational. It lives in intake workflows, document handling, and validation rules, areas that rarely get executive attention.

The Appeal of “Smarter” Models

Advanced models are visible. They make for strong board-level updates and vendor demos. They promise differentiation through intelligence rather than discipline. When underwriting performance stalls, it’s tempting to assume the answer lies in better prediction rather than better inputs.

But this logic ignores how underwriting actually works. Most underwriting decisions don’t fail because the model lacks sophistication. They fail because the data doesn’t reflect the true risk. A model can only be as good as the information it receives.

The Misplaced ROI Assumption

There’s also a widespread assumption that sophistication equals return. Teams expect that more complex models will automatically unlock better loss ratios or faster growth. In practice, the biggest performance gains usually come from improving data quality: fewer corrections, overrides, and downstream issues.

A simpler model fed with accurate, consistent data will outperform a sophisticated one trained on noise. It will produce more stable results, be easier to explain, and require less intervention from underwriters trying to correct for bad inputs.

Until insurers treat underwriting data accuracy as a strategic priority, AI investments will continue to underdeliver, no matter how advanced the models become.

Underwriting Data Accuracy as the Real AI Foundation

If insurers want AI to improve underwriting outcomes, they need to rethink where that improvement actually begins. It doesn’t start with models. It starts with the data those models depend on.

Accurate underwriting data isn’t about perfection. It’s about consistency, traceability, and trust in the inputs that drive decisions.

What “Accurate Data” Really Means in Underwriting

In an underwriting context, accuracy goes beyond correct numbers. It means that values align across documents, that fields are structured the same way every time, and that the source of each data point is clear. It means knowing which version of a schedule is current, which loss run is authoritative, and which application fields are missing versus intentionally left blank.
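The difference between a missing field and one left blank on purpose can be captured in the data itself rather than left to memory. The sketch below is one hypothetical way to do that. The status names, the list of "not applicable" markers, and the choice to treat an empty string as missing are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FieldStatus(Enum):
    PROVIDED = "provided"
    INTENTIONALLY_BLANK = "intentionally_blank"  # applicant marked it not applicable
    MISSING = "missing"                          # nothing was captured at all


@dataclass(frozen=True)
class ApplicationField:
    name: str
    value: Optional[str]
    status: FieldStatus


# Hypothetical markers a broker might use to signal "not applicable".
NOT_APPLICABLE_MARKERS = {"n/a", "na", "none", "not applicable"}


def classify(name: str, raw: Optional[str]) -> ApplicationField:
    """Normalize a raw field value and record why it is empty, if it is."""
    if raw is None or raw.strip() == "":
        # Assumption: an empty string means the value was never captured.
        return ApplicationField(name, None, FieldStatus.MISSING)
    text = raw.strip()
    if text.lower() in NOT_APPLICABLE_MARKERS:
        return ApplicationField(name, None, FieldStatus.INTENTIONALLY_BLANK)
    return ApplicationField(name, text, FieldStatus.PROVIDED)


fields = [
    classify("sprinkler_system", " Yes "),
    classify("prior_carrier", "N/A"),
    classify("year_built", None),
]
needs_follow_up = [f.name for f in fields if f.status is FieldStatus.MISSING]
```

Here only `year_built` ends up in `needs_follow_up`. The field marked "N/A" is recorded as a deliberate answer, so a downstream model or underwriter does not have to guess which kind of blank it is looking at.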

Most importantly, it means minimizing manual rekeying. Every time data is copied from one place to another, the risk of distortion increases. Over time, small inconsistencies compound, and the file drifts further from reality.

Why Document Intelligence Comes First

Underwriting data doesn’t originate in databases. It originates in documents. PDFs, spreadsheets, scanned forms, and email attachments are the raw materials of underwriting. If those documents aren’t extracted accurately and validated consistently, no amount of downstream analytics can fix the problem.

This is where AI delivers its most immediate value: creating structure. Accurate extraction, cross-document validation, and consistency checks ensure that underwriters and models are working from a single, reliable version of the truth.

When underwriting data is clean at the point of intake, everything downstream improves. Models behave more predictably. Underwriters trust the outputs they see. Portfolio analysis reflects reality instead of approximation. Accuracy becomes the foundation on which all other AI capabilities can safely build.

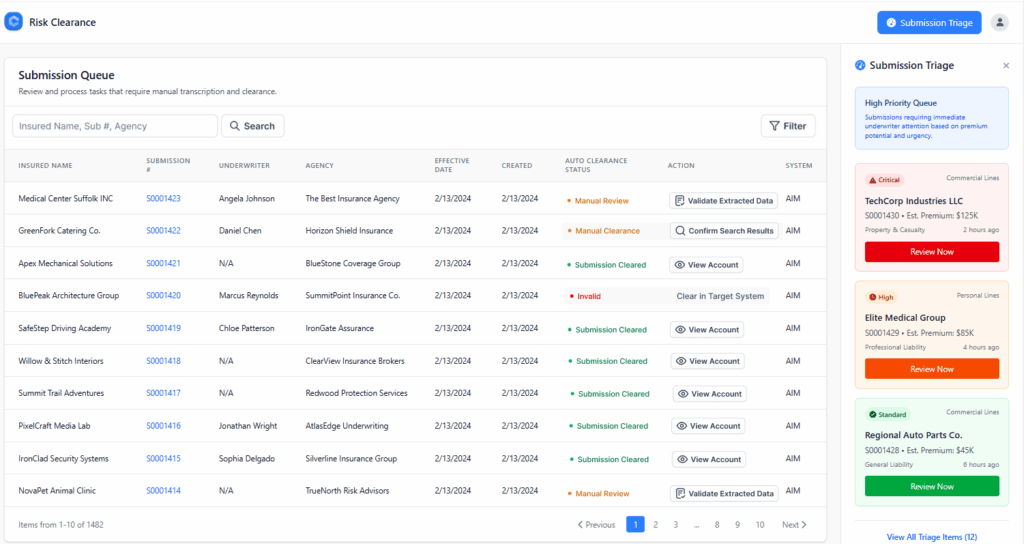

How Bound AI Approaches Underwriting Data Accuracy

Most AI platforms in insurance start by making decisions more intelligently. Bound AI starts by making the data right. That distinction matters because no underwriting decision, human or machine, can outperform the quality of the information on which it is based.

Bound AI was built for the reality of underwriting. Submissions arrive messy. Documents conflict. Data lives in PDFs, spreadsheets, and emails long before it ever reaches a system. Rather than assuming clean inputs, Bound AI is designed to operate in this environment and impose order on it.

The focus is on accuracy first. Bound AI’s document intelligence agents extract data from loss runs, SOVs, applications, and supporting documents with insurance-specific context in mind. Values are validated, totals are checked, and inconsistencies across documents are flagged before they move downstream. This reduces the silent errors that undermine underwriting decisions and portfolio analysis.
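As a rough illustration of what checking totals and flagging cross-document inconsistencies can look like, here is a minimal sketch. It is not Bound AI's implementation. The function name `validate_totals`, the one-dollar tolerance, and the sample values are assumptions.

```python
from decimal import Decimal


def validate_totals(sov_line_items, sov_stated_total, application_tiv,
                    tolerance=Decimal("1.00")):
    """Return a list of flags; an empty list means every check passed."""
    flags = []
    computed = sum(sov_line_items, Decimal("0"))

    # Check 1: do the SOV line items add up to the total printed on the SOV?
    if abs(computed - sov_stated_total) > tolerance:
        flags.append(
            f"SOV line items sum to {computed:,.2f}, "
            f"but the SOV states {sov_stated_total:,.2f}"
        )

    # Check 2: does the SOV agree with the TIV reported on the application?
    if abs(computed - application_tiv) > tolerance:
        flags.append(
            f"SOV line items sum to {computed:,.2f}, "
            f"but the application reports {application_tiv:,.2f}"
        )
    return flags


# Hypothetical submission: the SOV is internally consistent,
# but the application carries a different TIV.
line_items = [Decimal("4200000"), Decimal("1850000"), Decimal("950000")]
flags = validate_totals(line_items, Decimal("7000000"), Decimal("7500000"))

for flag in flags:
    print(flag)
```

In this example the SOV passes its internal check but disagrees with the application, so exactly one flag is raised. The point of the tolerance is to ignore rounding differences while still catching discrepancies that would change a risk assessment.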

Just as importantly, Bound AI preserves traceability. Every extracted value can be tied back to its source. Underwriters can see where the data came from, verify it, and correct it when needed. This transparency builds trust and allows AI to function as support rather than a black box.
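One way to picture this kind of traceability is a value that carries its own provenance and correction history. The sketch below is a hypothetical data model for illustration only. The class names, fields, file name, and user name are assumptions and do not describe Bound AI's actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Source:
    document: str  # file the value was extracted from
    page: int      # page within that file


@dataclass
class TracedValue:
    name: str
    value: str
    source: Source
    history: List[str] = field(default_factory=list)

    def correct(self, new_value: str, corrected_by: str) -> None:
        """Apply a manual correction while keeping a record of the old value."""
        self.history.append(f"{self.value} -> {new_value} (by {corrected_by})")
        self.value = new_value


# Hypothetical extracted value and a later underwriter correction.
tiv = TracedValue(
    name="total_insured_value",
    value="5335000",
    source=Source(document="sov_2024.pdf", page=2),
)
tiv.correct("7000000", corrected_by="underwriter_1")

print(tiv.value, tiv.source.document, tiv.source.page)
print(tiv.history)
```

Because the source and the history travel with the value, an underwriter can check the original document and see who changed what, instead of trusting a number with no context.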

By prioritizing clean, structured, and explainable data at intake, Bound AI creates a reliable foundation for everything that follows, whether that’s human underwriting judgment, rules-based workflows, or more advanced decision support. The goal isn’t to replace underwriters. It’s to ensure that every decision they make is grounded in accurate information.

The Bottom Line

AI doesn’t create intelligence on its own. It amplifies whatever already exists in the underwriting process. When the data is inaccurate, inconsistent, or incomplete, AI scales the problem faster and with more confidence. When the data is clean, structured, and reliable, AI becomes a true force multiplier.

Insurers don’t lose underwriting performance because their models aren’t advanced enough. They lose it because their inputs don’t reflect reality. No amount of sophistication can compensate for broken data flowing in at intake.

The future of underwriting AI won’t be defined by who builds the most complex models. It will be defined by who builds the cleanest data foundation. Insurers that prioritize underwriting data accuracy first will see better decisions, stronger portfolios, and AI that actually delivers on its promise.
